I’m going to start by saying I’m totally new to LLMs and running them locally, so I’m not going to pretend like I know what I am doing. I’ve been learning about Ollama for some time now and thought I would share it with my readers as always. This is such an interesting topic and I’m ready to go into the rabbit hole.

As always, if you find the content useful, don’t forget to press the ‘clap’ button. This is one way for me to know that you like this type of content, which means a lot to me. So, let's get started.

Large Language Models (LLMs)

LLMs, or Large Language Models, are a type of artificial intelligence designed to process and generate natural language. They are trained on vast amounts of text data, enabling them to understand context, identify patterns, and produce human-like responses. These models can perform various tasks such as answering questions, translating languages, summarising text, generating creative content, and assisting with coding. LLMs have gained significant attention in recent years due to their impressive performance and versatility.

Ollama

Ollama makes installing and running Large Language Models very easy, allowing us to deploy models locally within minutes. With Ollama, you can quickly run an LLM without having to go through the complex process of training and fine-tuning a model from scratch. Simply install Ollama, select your desired local LLM, and you're up and running in no time.

Ollama is available for Windows, Mac, and Linux, making it accessible to a wide range of users. In this example, I'm running Ollama on my Mac (M3 Pro with 18GB of RAM).

Installing Ollama is as simple as visiting the official website and downloading the installation package for your operating system. Once installed, you can easily install a local LLM of your choice, such as llama3.2, which I started with by default. Running llama3.2 in my command line has been very responsive and quick, making it an excellent starting point for my experiments.

But, Why Though?

You might be thinking, "Why do I need a local LLM when I already have access to powerful tools like ChatGPT or Claude?" Well, let me explain.

Running LLMs locally offers several advantages over using cloud-based models like ChatGPT or Claude. One of the main benefits is privacy. When running a model locally, all data stays on your machine, ensuring sensitive or personal information isn't sent to external servers. This is particularly important for businesses or individuals handling confidential data.

Another key benefit is the ability to access and use local LLMs offline. You don't need an active Internet connection to interact with the model, making it reliable in situations where connectivity is limited or unavailable. Additionally, running models locally gives you more control over the environment, such as customising the model or adjusting its behaviour to better suit specific use cases. While cloud models are convenient, local LLMs provide more privacy, independence, and flexibility.

Installation and Setup

As I mentioned earlier, installing Ollama is a breeze - simply download the installation file from Ollama's website and run it.

For Linux, there is a handy script and it took around a couple of minutes to complete.

curl -fsSL https://ollama.com/install.sh | sh>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Creating ollama user...

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

Created symlink /etc/systemd/system/default.target.wants/ollama.service

→ /etc/systemd/system/ollama.service.

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.Once installed, you'll need to choose which model to run. There are many models available for specific tasks, and some of them require significant resources to run, such as 128GB of RAM or a dedicated GPU.

When I installed Ollama, I was prompted to run llama3.2, a model from Meta that comes with smaller and larger variants (1B and 3B). For this model (3B), 16GB or even 8GB of RAM should be enough.

- 7b models generally require at least 8GB of RAM

- 13b models generally require at least 16GB of RAM

- 70b models generally require at least 64GB of RAM

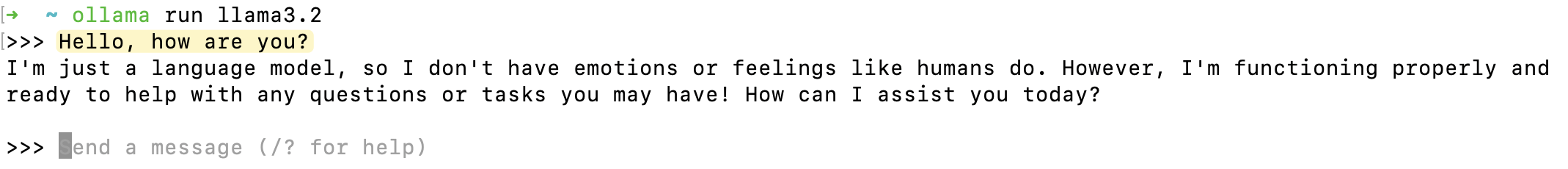

After installation, I opened the Ollama app, which ran in the background without a GUI. Next, I opened my terminal and ran the command ollama run llama3.2. This told Ollama to pull the model and then launched me into a shell where I could interact with it.

Basic Usage

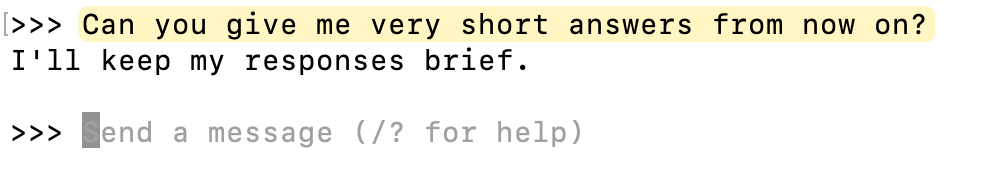

In this shell, you can type commands and receive responses from the model, just like how you would interact with ChatGPT.

Once you are done, you can exit out by running the command \bye

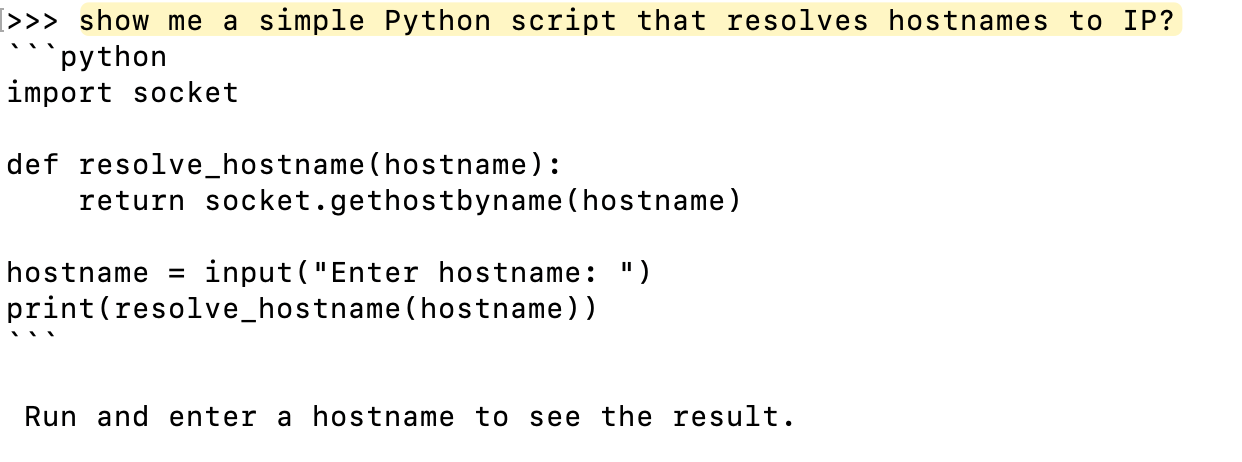

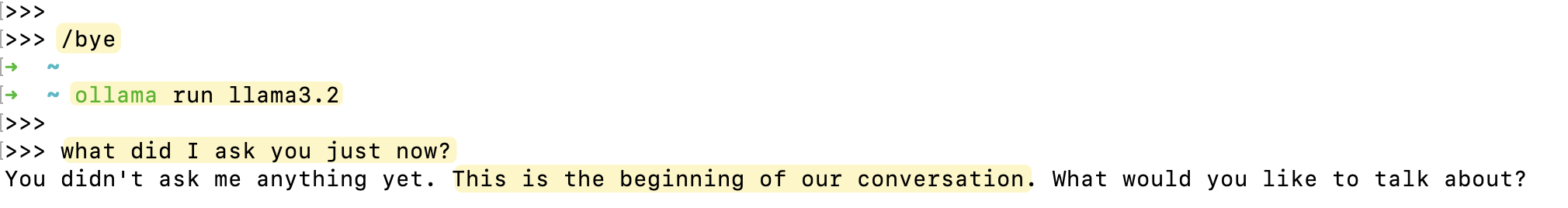

>>> /bye

➜ ~ When you use /bye and close the session in the terminal, your chat history is not saved. If you re-run the model, it won’t have any memory of your previous discussions, and each session will start fresh without context from earlier interactions.

Run Another Model (Code Llama)

Let's explore another model that's specifically designed to help us write code - Code Llama. Codellama is a model that helps with coding tasks, such as suggesting code completions and debugging.

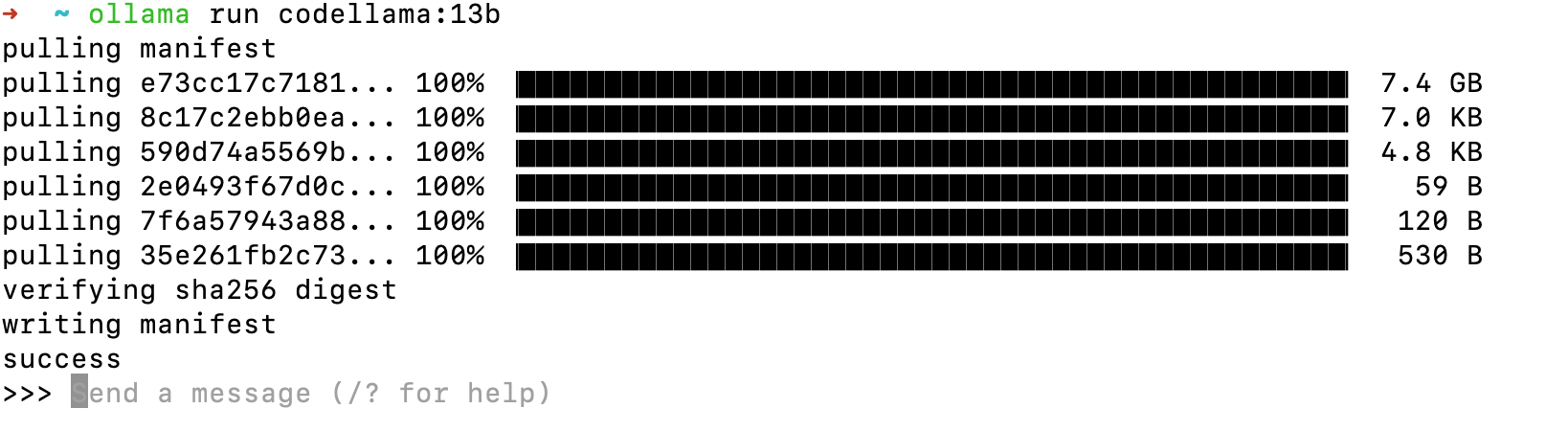

To try it out, I ran the command run codellama:13b, which installed the 13 billion parameter version of the model. This particular version requires around 16GB of RAM. I have 18GB so, I hope I don't crash my laptop 😄

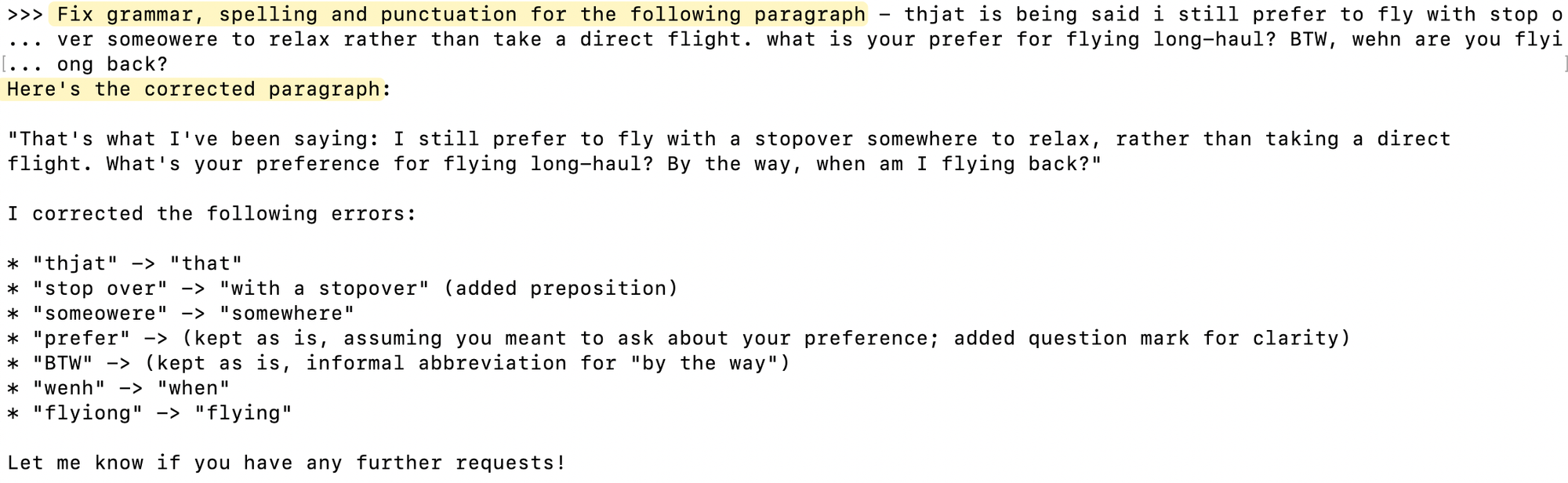

I decided to put some test cases in front of codellama to see how well it performs to the same question with additional features I'd like to have. Specifically, I wanted to test its error handling and multiple hostnames.

show me a simple Python script that resolves hostnames to IP? take error handling into account and also cover having multiple hostnames

import socket

def get_ip(hostname):

try:

return socket.gethostbyname(hostname)

except socket.gaierror as e:

print("Error resolving {}: {}".format(hostname, e))

return None

# Example usage

hostnames = ["google.com", "example.com"]

ips = [get_ip(hostname) for hostname in hostnames]

print("Resolved IPs:", ips)Okay, that's not bad, it gave me what I asked for. Let's go with something related to Network Automation.

Please give me a simple Netmiko script to connect to a device and run two config commands.

Here is an example of a simple Netmiko script that connects to a device, runs two configuration commands, and then closes the

connection:

```python

from netmiko import ConnectHandler

# Define the device parameters

device = {

"host": "192.0.2.1",

"username": "cisco",

"password": "cisco",

"secret": "cisco"

}

# Create a Netmiko connection object

with ConnectHandler(**device) as conn:

# Run the first configuration command

output = conn.send_command("config terminal")

print(output)

output = conn.send_command("interface FastEthernet0/1")

print(output)

output = conn.send_command("ip address 192.0.2.2 255.255.255.0")

print(output)

# Run the second configuration command

output = conn.send_command("exit")

print(output)It's almost perfect but missing a key device_type but it's definitely a good start. I would assume most people will use it in a way that helps them write code rather than just relying solely on AI tools.

Ollama Useful Commands

To get started with Ollama, you'll want to familiarize yourself with its various commands. You can run ollama help to view the available commands.

➜ ~ ollama help

Large language model runner

Usage:

ollama [flags]

ollama [command]

Available Commands:

serve Start ollama

create Create a model from a Modelfile

show Show information for a model

run Run a model

stop Stop a running model

pull Pull a model from a registry

push Push a model to a registry

list List models

ps List running models

cp Copy a model

rm Remove a model

help Help about any commandps: Shows all running models, allowing you to quickly check which ones are currently in use.list: Lists available modelsrun <model_name>: Installs and runs a specific model

Here is a sample output from ollama ps command.

➜ ~ ollama ps

NAME ID SIZE PROCESSOR UNTIL

llama3.2:latest a80c4f17acd5 4.0 GB 100% GPU 3 minutes from now

deepseek-r1:8b 28f8fd6cdc67 7.0 GB 100% GPU 30 seconds from nowClosing Up

Of course, this is not going to be as powerful as ChatGPT, which could be running on a supercomputer, but I just can't believe that I was able to run at least something on my local machine and have the data stay locally.

I'm going to experiment with it for the next few days/weeks and share my experience, so stay tuned. Don’t forget to subscribe to my blog post, so you receive updates directly to your inbox.