When I first started using local LLMs with Ollama, I quickly realised it relies on a command-line interface to interact with the models. It also comes with an API, but let’s be honest, most of us, myself included, prefer a GUI, much like the one ChatGPT provides. There are plenty of options available, but I decided to try Open WebUI. In this blog post, we’ll explore what Open WebUI is and how simple it is to set up a web-based interface for your local LLMs.


Overview

Ollama is a tool for running LLMs locally, offering privacy and control over your data. Out of the box, it lets you interact with models via the terminal or through its API. Installing Ollama is straightforward, and if you’d like a detailed guide, check out my other blog post, linked below.

This blog post assumes you already have Ollama set up and running. For reference, I’m running this on my MacBook (M3 Pro with 18GB of RAM).

open-webui

Open WebUI is a web-based interface that lets you interact with local LLMs in a more user-friendly way. It provides a ChatGPT-like experience, so you can work with models without relying on the command line. There are multiple installation methods available, including Docker, running it directly with Python, or using pre-built binaries for supported platforms.

Open WebUI also supports installing Ollama as part of a bundled setup. However, I’m not using that option since I already have Ollama installed natively on my system.

Installing open-webui via Docker

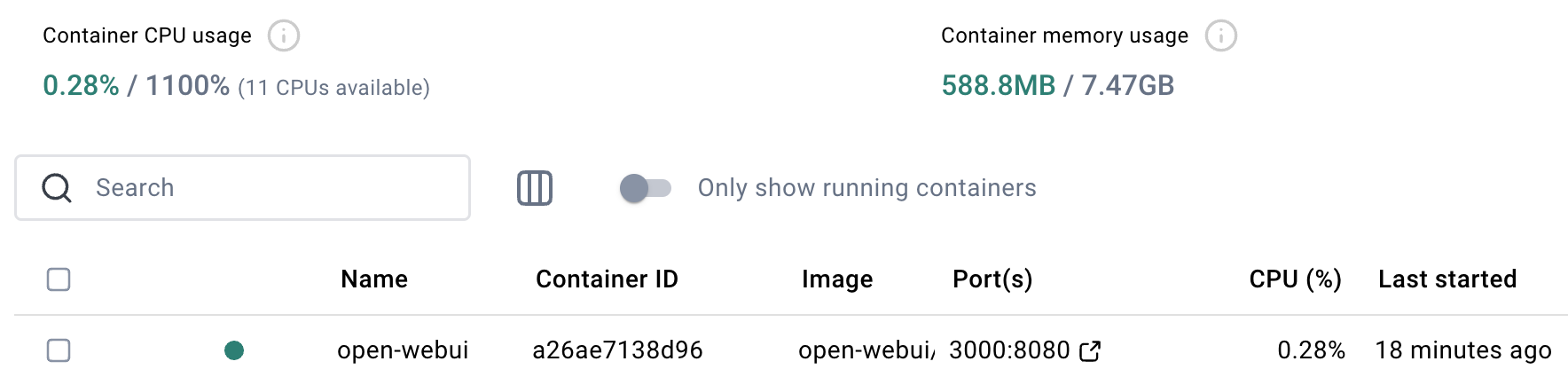

This post assumes you are familiar with Docker. Since I’m running this on my Mac, I have Docker Desktop installed. Installing Open WebUI is as simple as running the following command (tested on macOS):

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

In this command, docker run -d starts the container in detached mode, allowing it to run in the background. The -p 3000:8080 option maps port 8080 inside the container to port 3000 on your local machine, making the web interface accessible at http://localhost:3000.

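The --add-host=host.docker.internal:host-gateway option maps the hostname host.docker.internal to the host machine’s gateway address, so the container can reach services running on your host, such as Ollama on port 11434. The -v open-webui:/app/backend/data option mounts a Docker volume so your data (accounts, settings and chats) persists when the container is recreated. Finally, --name open-webui names the container, --restart always restarts it automatically if it crashes and whenever Docker starts up, and ghcr.io/open-webui/open-webui:main specifies the image to use.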
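If you’d rather not retype that long command, here is a minimal sketch that wraps it in a bash function with one comment per flag. The function name start_open_webui and the DRY_RUN variable are my own hypothetical additions; the flags themselves are exactly the ones above.

```shell
#!/usr/bin/env bash
# Sketch: the docker run command from this post, one flag per line with a comment.
# start_open_webui and DRY_RUN are hypothetical names used only for illustration.

start_open_webui() {
  local -a args
  args=(
    run -d                                        # start the container in the background
    -p 3000:8080                                  # host port 3000 -> container port 8080
    --add-host=host.docker.internal:host-gateway  # let the container reach the host (Ollama on 11434)
    -v open-webui:/app/backend/data               # named volume so accounts and chats persist
    --name open-webui                             # fixed name for later docker commands
    --restart always                              # restart on crash and when Docker starts
    ghcr.io/open-webui/open-webui:main            # the image to run
  )
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Print the full command instead of running it.
    echo docker "${args[@]}"
  else
    docker "${args[@]}"
  fi
}
```

Run DRY_RUN=1 start_open_webui to print the command for a quick check, then start_open_webui to actually start the container. Keeping the flags in an array lets each one carry its own comment without fighting backslash line continuations.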

Small issue on Ubuntu 22.04

When I tried to run Open WebUI on an Ubuntu server where Ollama is also installed, Open WebUI didn't detect the models. After some research, I found a fix here. I ran the following Docker command instead, and it worked.

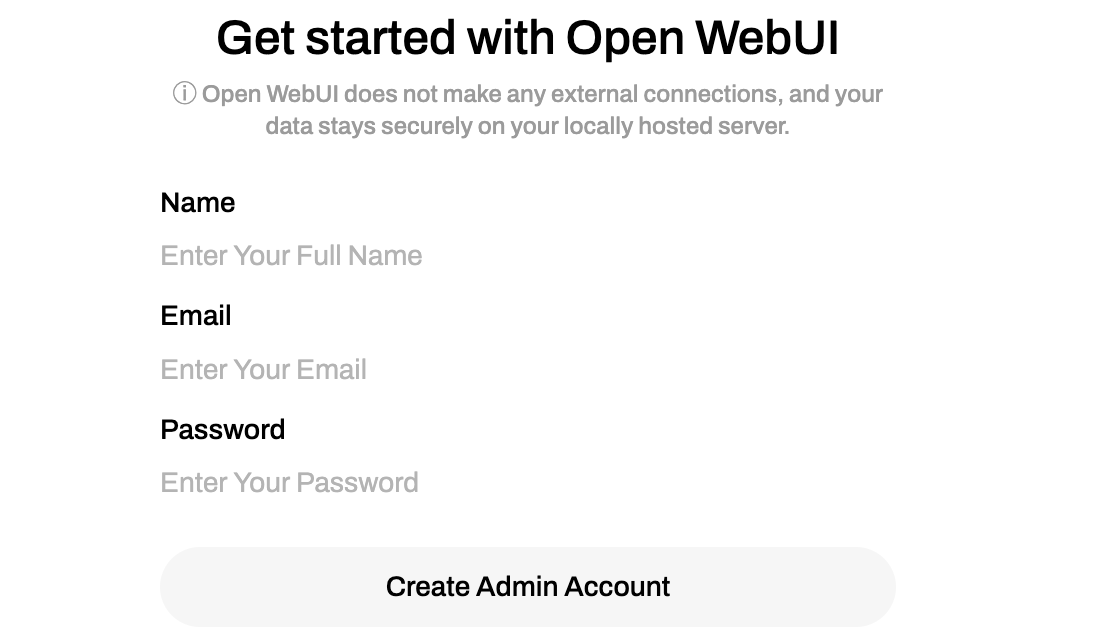

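docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

With --network=host, the container shares the host’s network stack, so http://127.0.0.1:11434 points straight at Ollama on the host. This is likely why it fixes the problem: by default, Ollama listens only on 127.0.0.1, which a container on Docker’s default bridge network cannot reach through host.docker.internal. It also means there is no port mapping, so Open WebUI is served directly on its own port, 8080.

Once installed, navigate to http://localhost:3000 in your browser (or http://localhost:8080 if you used the Linux command above). You’ll need to create a local account and log in.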

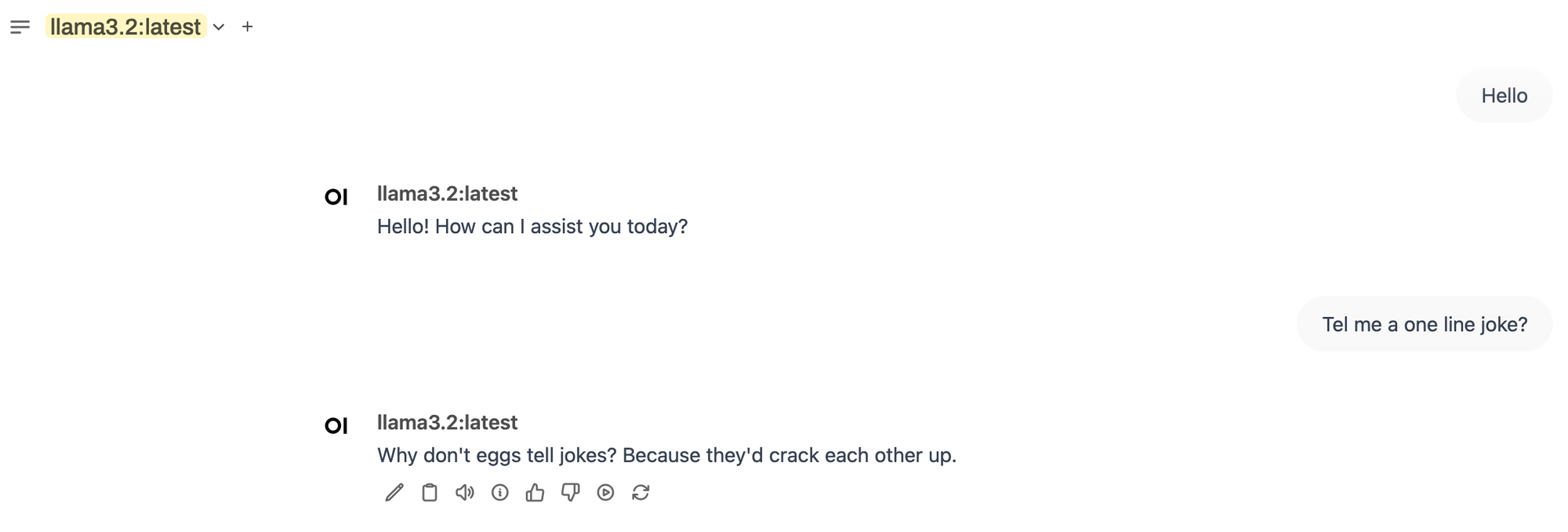

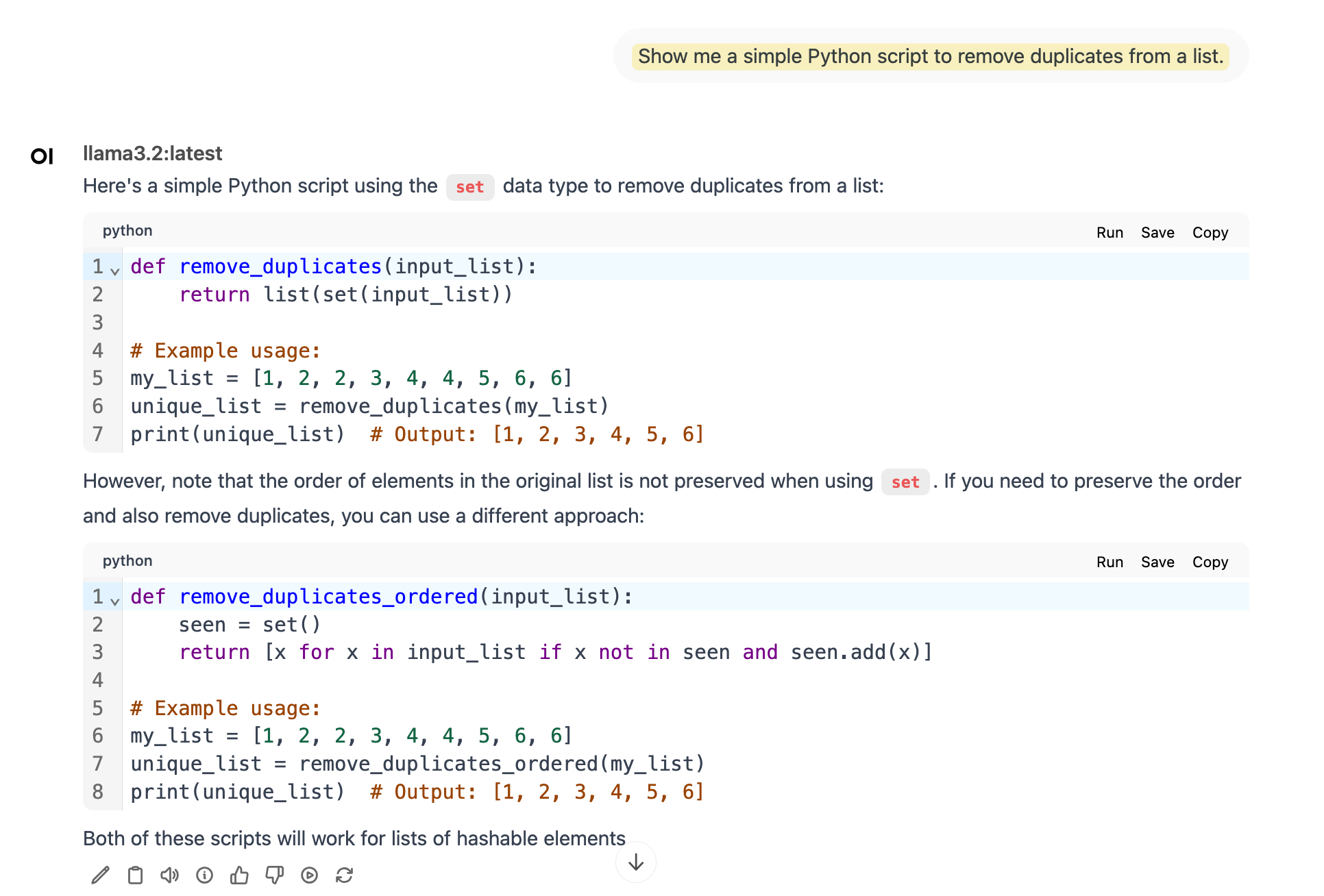
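Once you’re logged in, the model dropdown should list the models you’ve pulled with Ollama. If it’s empty, as it was on my Ubuntu server, a quick first check is whether Ollama answers at the base URL Open WebUI is using. This sketch assumes curl is installed and uses Ollama’s /api/version endpoint; check_ollama is a hypothetical helper name, and http://127.0.0.1:11434 is Ollama’s default address.

```shell
#!/usr/bin/env bash
# Sketch: check whether Ollama responds at a given base URL.
# check_ollama is a hypothetical helper; /api/version is Ollama's version endpoint.
# Assumes curl is installed.

check_ollama() {
  # Use the first argument, else $OLLAMA_BASE_URL, else Ollama's default address.
  local base_url="${1:-${OLLAMA_BASE_URL:-http://127.0.0.1:11434}}"
  # -f fails on HTTP errors, -sS hides progress but keeps error messages,
  # --max-time stops the check from hanging on an unresponsive host.
  if curl -fsS --max-time 5 "${base_url}/api/version" >/dev/null; then
    echo "Ollama is reachable at ${base_url}"
  else
    echo "Ollama is NOT reachable at ${base_url}" >&2
    return 1
  fi
}
```

Running check_ollama on the host confirms that Ollama itself is up. If that succeeds but Open WebUI still shows no models, docker logs open-webui is a good place to look for connection errors.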

If you already have a model running via Ollama, you can start using it straight away, as shown below.

Open WebUI also formats code in responses neatly and presents it in a clean, user-friendly way, similar to other AI tools like ChatGPT or Claude.

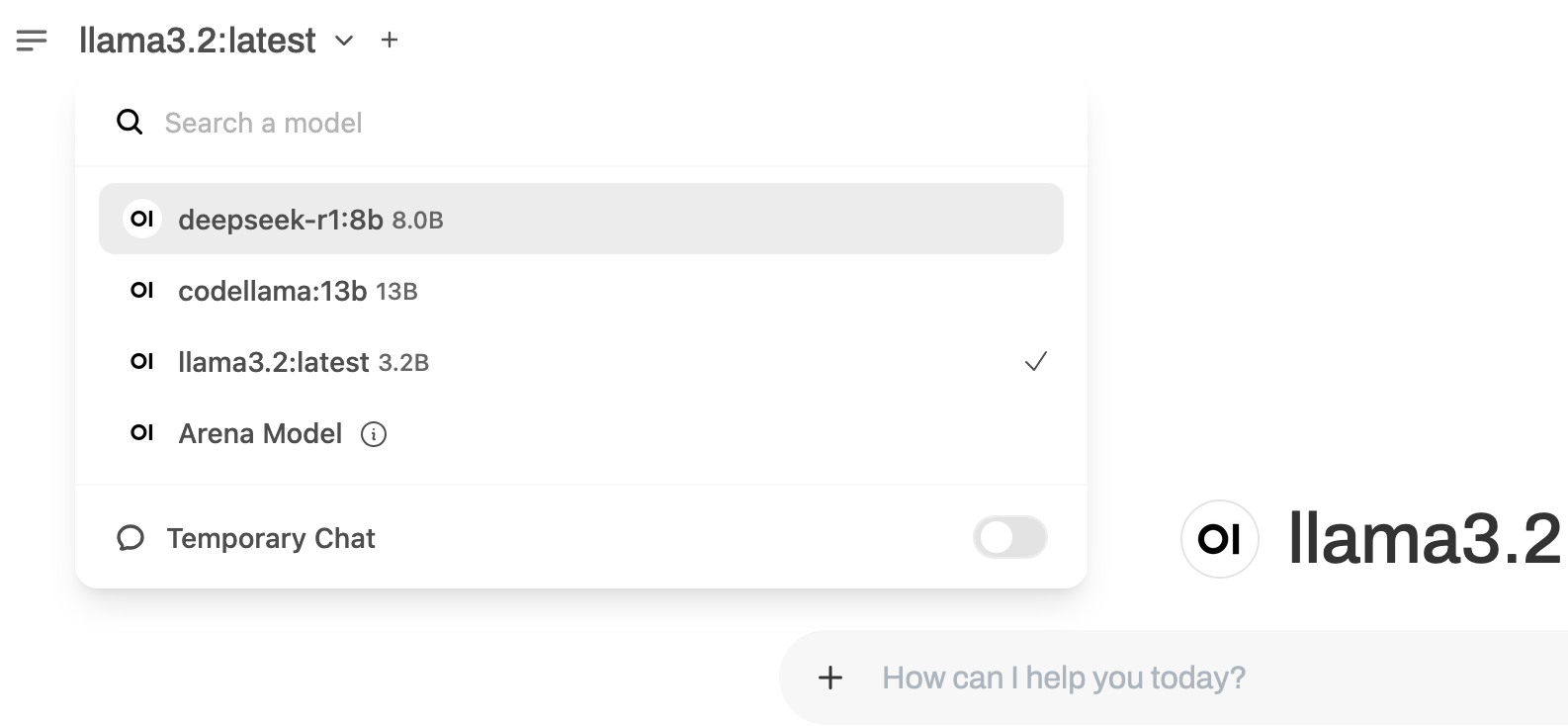

If you have multiple models installed, you can choose which one to use. Open WebUI detects the installed models and displays them in a dropdown menu, making it easy to switch between them.
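As far as I can tell, Open WebUI builds that dropdown from Ollama’s GET /api/tags endpoint, which returns the installed models as JSON. The sketch below lists the same names from the terminal, which is handy when a model you expect is missing. list_ollama_models and parse_model_names are hypothetical helpers; to avoid depending on jq, the parsing uses grep and cut, so it assumes compact JSON and model names without escaped quotes.

```shell
#!/usr/bin/env bash
# Sketch: list the model names Ollama reports via GET /api/tags.
# Hypothetical helpers; parsing is grep/cut based, not a real JSON parser,
# so it assumes compact JSON and no escaped quotes in model names.

parse_model_names() {
  # Read JSON on stdin and print each "name" value on its own line.
  grep -o '"name":"[^"]*"' | cut -d'"' -f4
}

list_ollama_models() {
  local base_url="${1:-http://localhost:11434}"
  curl -fsS "${base_url}/api/tags" | parse_model_names
}
```

If you prefer the built-in CLI, ollama list prints the same models as a table.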

Installing open-webui with Python

If you don’t want to use Docker, you can install Open WebUI with Python. Make sure you have Python 3.11 or newer, as earlier versions such as 3.10 will not work. As always, it’s a good idea to create a virtual environment before installing.

python3 -m venv venv

source venv/bin/activate

pip install open-webui

After the installation is complete, start the server with open-webui serve, then open http://localhost:8080 in your browser. The functionality is exactly the same as with the Docker setup.
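The Python steps can be combined into a small bash function that checks the Python version before installing, since that requirement is the one most likely to trip you up. python_version_ok and install_open_webui are hypothetical names, and the 3.11 cut-off is simply the minimum mentioned above.

```shell
#!/usr/bin/env bash
# Sketch: pip-based install of Open WebUI with a Python version check first.
# python_version_ok and install_open_webui are hypothetical helper names.

python_version_ok() {
  # $1 is a version string like "3.11.4"; succeed if it is 3.11 or newer.
  local major minor
  IFS=. read -r major minor _ <<< "$1"
  [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "$minor" -ge 11 ]; }
}

install_open_webui() {
  local version
  version="$(python3 -c 'import platform; print(platform.python_version())')" || return 1
  if ! python_version_ok "$version"; then
    echo "Python $version found; Open WebUI needs 3.11 or newer" >&2
    return 1
  fi
  python3 -m venv venv        # create an isolated environment
  source venv/bin/activate    # activate it in the current shell
  pip install open-webui      # install the package
  open-webui serve            # start the server on http://localhost:8080
}
```

Because install_open_webui runs in the current shell, the virtual environment stays active afterwards. Next time, only source venv/bin/activate and open-webui serve are needed.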

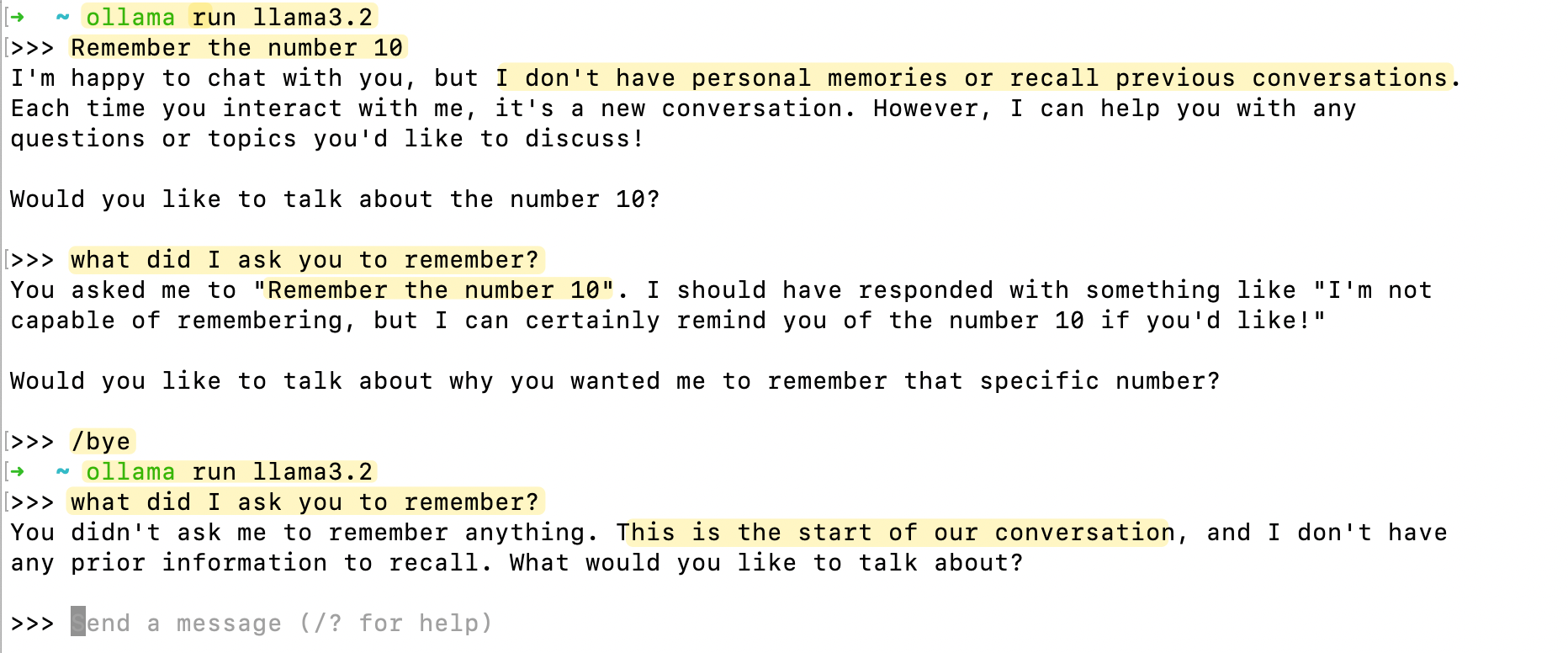

Chat History

When you use the terminal to interact with Ollama, the behaviour is quite different from Open WebUI. In the terminal, if you end the session with /bye or close the terminal, the chat history is not saved. Each new session starts fresh, and you won’t have access to any previous conversations.

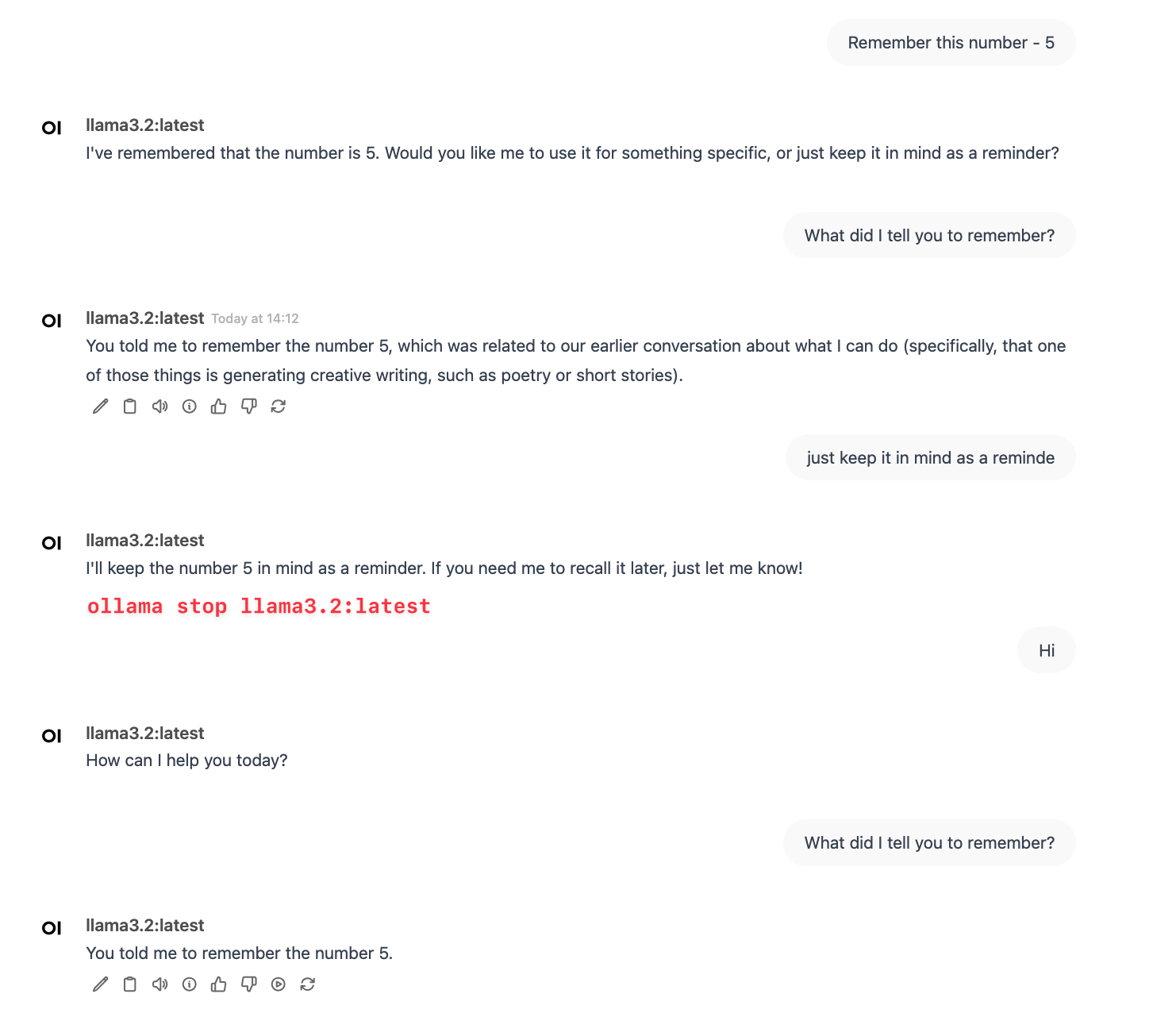

In contrast, Open WebUI preserves chat history. If you restart your computer or close and reopen the web interface, your past conversations will still be available.

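To test this, I asked the model to remember the number 5. I then stopped the model, restarted it, and returned to the GUI. When I asked the model about the number, it responded correctly. The model itself doesn’t store anything between requests; this works because Open WebUI saves the conversation and sends the earlier messages back to the model along with each new prompt.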
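Here is a sketch of what that looks like at the API level, assuming Ollama’s /api/chat endpoint on the default port and a model called llama3.2 (substitute whichever model you have). The previous turns are sent again in the messages array, which is what lets the model answer the follow-up. The assistant reply in the history is made up for illustration, chat_payload and send_chat are hypothetical helpers, and this shows the general idea rather than Open WebUI’s exact request.

```shell
#!/usr/bin/env bash
# Sketch: a chat "remembers" because the history is resent with each request.
# Assumes Ollama on localhost:11434 and a model named llama3.2 (hypothetical choice).
# The assistant message below is an invented example reply.

chat_payload() {
  local model="${1:-llama3.2}"
  cat <<EOF
{
  "model": "${model}",
  "stream": false,
  "messages": [
    {"role": "user", "content": "Please remember the number 5."},
    {"role": "assistant", "content": "Got it, I will remember the number 5."},
    {"role": "user", "content": "What number did I ask you to remember?"}
  ]
}
EOF
}

send_chat() {
  # --data-binary @- sends the JSON from stdin unchanged.
  chat_payload "$1" | curl -fsS http://localhost:11434/api/chat --data-binary @-
}
```

Running send_chat llama3.2 should get an answer that refers to 5, because the earlier turns are part of the request. Drop the first two messages from the payload and the model has nothing to go on.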

Of course, it won’t remember the entire chat history, because the context window is limited. By default, Ollama runs models with a context window of 2048 tokens (its num_ctx setting), and Open WebUI uses that default unless you change it, even when the model itself supports a longer context. This means the model can only see roughly the most recent 2048 tokens of the conversation, counting both your prompts and its replies, and older messages drop out of view.
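If your machine has memory to spare, one way to raise that limit is to create a variant of the model with a larger num_ctx using an Ollama Modelfile. This is a sketch assuming the ollama CLI is on your PATH and a base model named llama3.2; the value 8192 and the helper names are only examples, and a larger window needs more RAM.

```shell
#!/usr/bin/env bash
# Sketch: build a larger-context variant of a local model via a Modelfile.
# write_modelfile and create_long_context_model are hypothetical helpers;
# llama3.2 and 8192 are example values, not recommendations.

write_modelfile() {
  local base_model="$1" num_ctx="$2" path="$3"
  # FROM picks the base model; PARAMETER num_ctx sets the context length.
  printf 'FROM %s\nPARAMETER num_ctx %s\n' "$base_model" "$num_ctx" > "$path"
}

create_long_context_model() {
  local base_model="${1:-llama3.2}" num_ctx="${2:-8192}"
  # Strip any ":tag" so llama3.2:latest becomes llama3.2-ctx8192.
  local new_name="${base_model%%:*}-ctx${num_ctx}"
  write_modelfile "$base_model" "$num_ctx" Modelfile
  ollama create "$new_name" -f Modelfile   # register the new model with Ollama
}
```

After running create_long_context_model llama3.2 8192, a model named llama3.2-ctx8192 should show up in Open WebUI’s model dropdown alongside the original.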

In contrast, cloud-based models like those behind ChatGPT Plus offer much larger context windows, often extending to tens of thousands of tokens. This allows them to maintain much longer conversations without losing earlier details.

For example, if I start a conversation by saying “I have an Alfa Romeo car” and then move on to other topics, after a long discussion the model may no longer remember that I own a car. This happens because the earlier parts of the conversation eventually fall out of the context window, so the model can no longer access that information.
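To make the Alfa Romeo example concrete, the following is a simplified model of a sliding window: starting from the newest message, keep messages while their token counts still fit in the budget. It’s an illustration only; real tokenisers and the way Ollama actually truncates a prompt are more involved, and messages_in_window and the token counts are made up.

```shell
#!/usr/bin/env bash
# Simplified sliding-window illustration (not Ollama's actual truncation logic).
# messages_in_window is a hypothetical helper; token counts are invented numbers.

messages_in_window() {
  # $1 = budget in tokens; remaining args = token counts per message, oldest first.
  # Prints how many of the most recent messages fit within the budget.
  local budget="$1"; shift
  local -a counts=("$@")
  local used=0 kept=0 i
  for (( i=${#counts[@]}-1; i>=0; i-- )); do
    # Stop at the first (newest-to-oldest) message that would overflow the budget.
    (( used + counts[i] > budget )) && break
    used=$(( used + counts[i] ))
    kept=$(( kept + 1 ))
  done
  echo "$kept"
}
```

With a 2048-token budget, messages_in_window 2048 15 600 700 800 prints 2: the two newest messages fit (1,500 tokens), adding the 600-token one would exceed the budget, so the opening 15-token line about the car is no longer in view.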

Closing Up

I hope this blog post has been useful. I plan to spend more time exploring Open WebUI and will update this post with my findings, so stay tuned.