Are you looking to upload multiple local files, spread across multiple directories, to an S3 bucket in AWS using Terraform? If so, you've come to the right place! In this blog post, we'll walk through how to do exactly that.

Terraform is an Infrastructure as Code (IaC) tool that lets you automate the deployment and management of resources on various cloud platforms. We'll show you how to create a new S3 bucket in AWS and use Terraform to upload multiple local files to it, which makes managing and organising your data in the cloud much easier. So, let's dive in and get started!

One use case for this is deploying Palo Alto VM-Series firewalls in AWS with bootstrapping. Bootstrapping allows the firewall to obtain its licenses and connect back to Panorama. For bootstrapping to work, you need to provide the required files to the firewalls using exactly the folder and file structure shown below.

```
/config
/content
/software
/license
/plugins

/config
    0008C100105-init-cfg.txt
    0008C100107-init-cfg.txt
    bootstrap.xml
/content
    panupv2-all-contents-488-2590
    panup-all-antivirus-1494-1969
    panup-all-wildfire-54746-61460
/software
    PanOS_vm-9.1.0
/license
    authcodes
    0001A100110-url3.key
    0001A100110-threats.key
    0001A100110-url3-wildfire.key
/plugins
    vm_series-2.0.2
```

If you are not familiar with Terraform, I highly recommend checking out my Terraform Introduction post first.

Our ultimate goal here is to create an S3 bucket and then upload all the directories/files shown above with Terraform.

Example

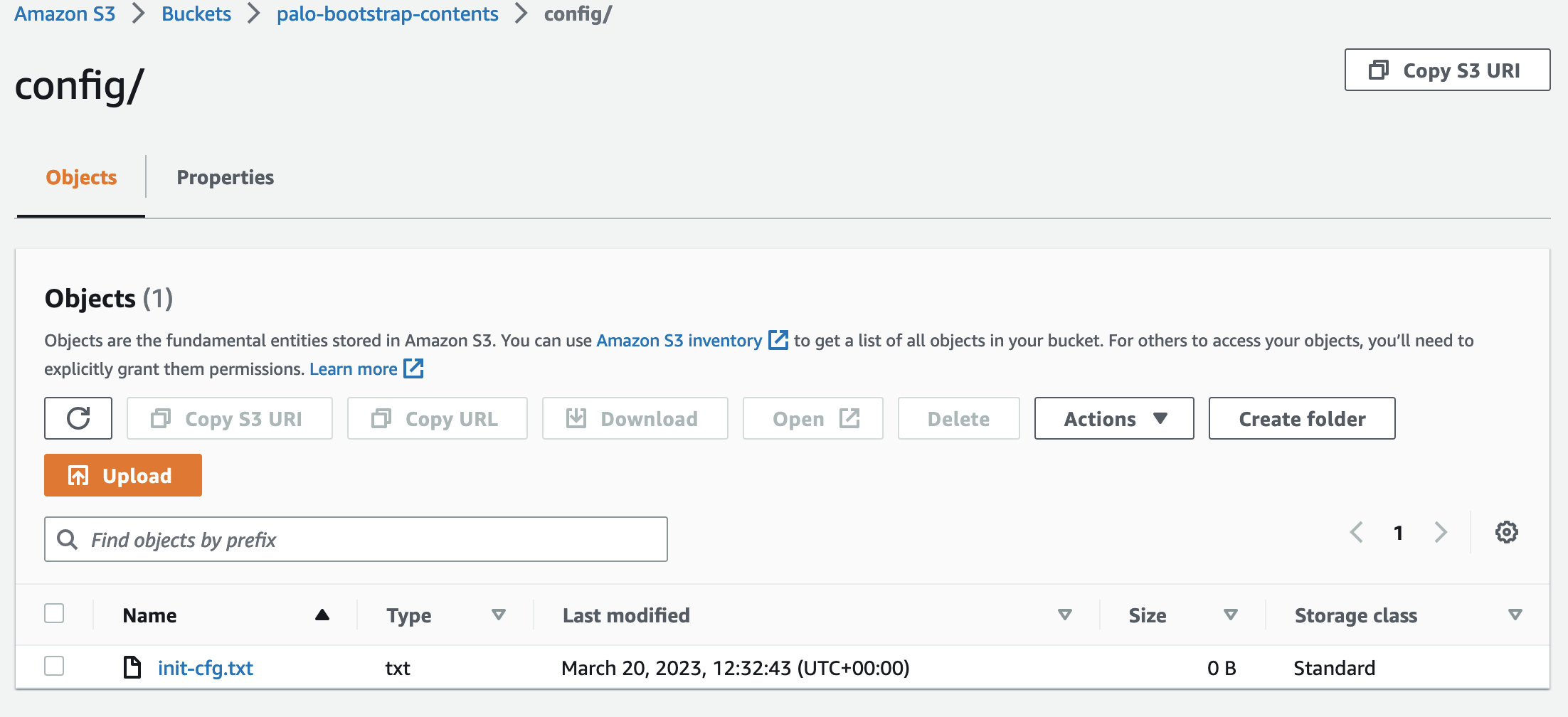

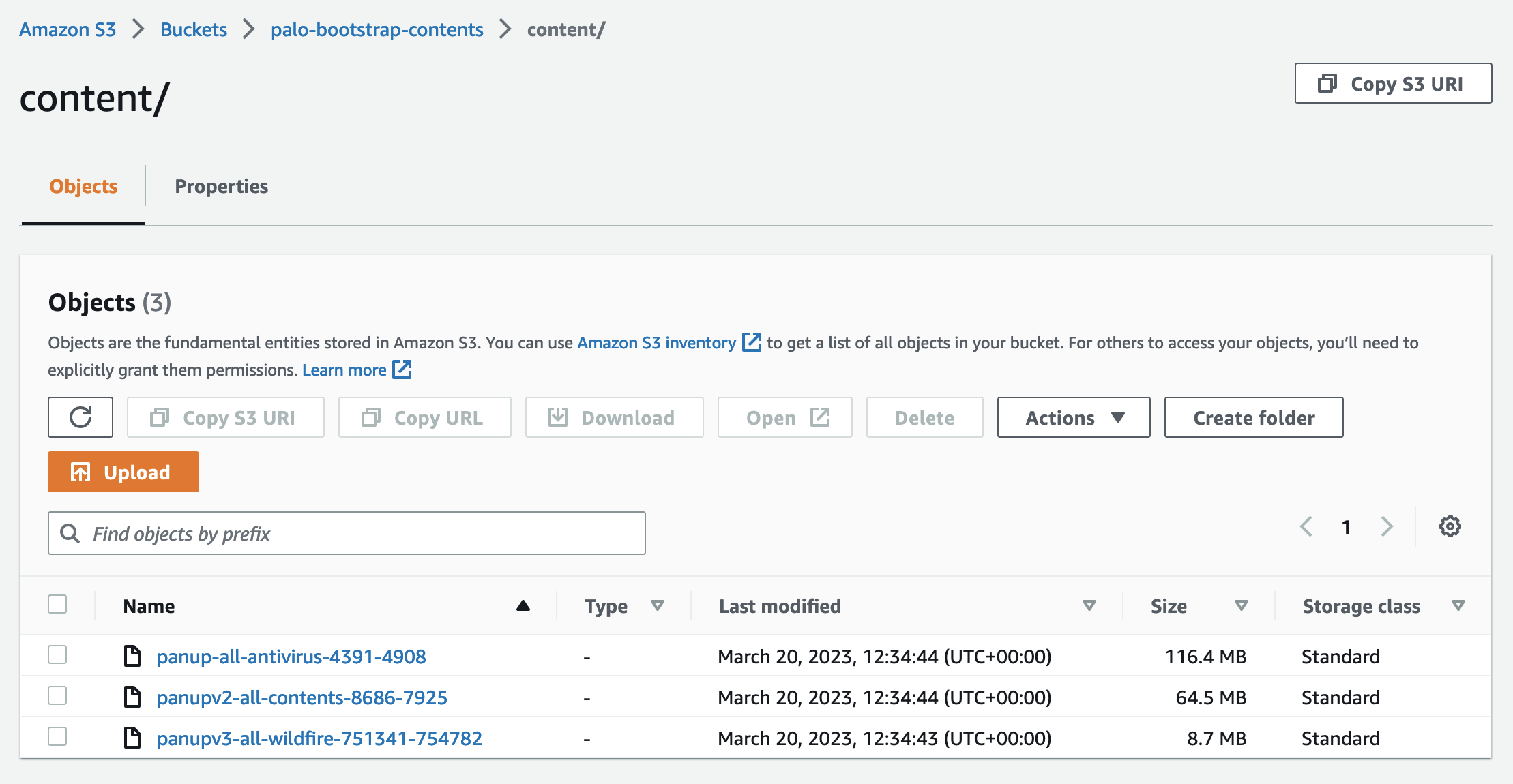

The create_s3 directory contains the Terraform configuration files, and s3_bootstrap contains the bootstrap files that will be uploaded to the bucket.

```
.
├── create_s3
│   ├── main.tf
│   ├── provider.tf
└── s3_bootstrap
    ├── config
    │   └── init-cfg.txt
    ├── content
    │   ├── panup-all-antivirus-4391-4908
    │   ├── panupv2-all-contents-8686-7925
    │   └── panupv3-all-wildfire-751341-754782
    ├── license
    │   └── authcodes
    └── plugins
        └── vm_series-2.0.2

6 directories, 10 files
```

Fileset Function

The fileset function returns the set of regular file names under a base directory that match a given pattern. The base directory is stripped from each result, so every path is relative to it.

```hcl
fileset(path, pattern)
```

A few of the supported pattern matches are shown below.

- `*` matches any sequence of non-separator characters
- `**` matches any sequence of characters, including separator characters
- `?` matches any single non-separator character
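To make the patterns a little more concrete, here is a small sketch run against the s3_bootstrap tree above. The local names (bootstrap_dir, config_txt_files and so on) are hypothetical and only there for illustration, and the results noted in the comments assume the directory contents shown earlier.

```hcl
locals {
  # Hypothetical local names, for illustration only
  bootstrap_dir = "../s3_bootstrap"

  # "*" does not cross "/", so this matches only .txt files directly inside config/
  # With the tree above, that is just "config/init-cfg.txt"
  config_txt_files = fileset(local.bootstrap_dir, "config/*.txt")

  # "*/*" matches files exactly one directory below bootstrap_dir
  # (in this tree that happens to be every file, since nothing is nested deeper)
  one_level_deep = fileset(local.bootstrap_dir, "*/*")

  # "?" matches one character: panupv2-... and panupv3-... match, panup-... does not
  versioned_content = fileset(local.bootstrap_dir, "content/panupv?-*")
}
```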

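To match every file in every sub-directory, we can use the ** pattern, as shown below. Here I'm asking Terraform, via terraform console, to fetch all the files under the s3_bootstrap directory. Note that fileset only returns files; directories appear only as part of each file's path.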

```
> fileset("s3_bootstrap", "**")
toset([
  "config/init-cfg.txt",
  "content/panup-all-antivirus-4391-4908",
  "content/panupv2-all-contents-8686-7925",
  "content/panupv3-all-wildfire-751341-754782",
  "license/authcodes",
  "plugins/vm_series-2.0.2",
])
```

You can now combine that with a for_each loop to upload each matching file to the S3 bucket.
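If the folder might contain files you don't want in the bucket (macOS Finder, for instance, can leave .DS_Store files behind), one option is to filter the fileset result with a for expression before handing it to for_each. This is a sketch only; the local names below are hypothetical.

```hcl
locals {
  # Hypothetical names; adjust the path to wherever your bootstrap files live
  bootstrap_source_dir = "../s3_bootstrap"

  # Keep every file at any depth, except macOS .DS_Store files
  bootstrap_upload_files = toset([
    for f in fileset(local.bootstrap_source_dir, "**") : f
    if basename(f) != ".DS_Store"
  ])
}
```

You would then set for_each = local.bootstrap_upload_files on the aws_s3_object resource instead of calling fileset directly.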

Terraform Configurations

```hcl
provider "aws" {
  region = "eu-west-1"
}
```

provider.tf
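The aws_s3_object and aws_s3_bucket_acl resources used in main.tf were added in version 4.0 of the AWS provider, so it may be worth pinning a minimum provider version next to provider.tf. A minimal sketch; the exact constraint is up to you:

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # aws_s3_object and aws_s3_bucket_acl first appeared in provider v4.0
      version = ">= 4.0"
    }
  }
}
```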

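```hcl
locals {
  # Path to the local bootstrap files, relative to the create_s3 directory
  s3_bootstrap_filepath = "../s3_bootstrap"
}

resource "aws_s3_bucket" "bootstrap" {
  bucket = "palo-bootstrap-contents"

  tags = {
    Name = "Bootstrap"
  }
}

resource "aws_s3_bucket_acl" "acl" {
  bucket = aws_s3_bucket.bootstrap.id
  acl    = "private"
}

# Block every form of public access to the bucket
resource "aws_s3_bucket_public_access_block" "public_access" {
  bucket = aws_s3_bucket.bootstrap.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# One S3 object per file returned by fileset. For a set, each.key and
# each.value are both the relative file path, so the folder structure is
# preserved in the object keys.
resource "aws_s3_object" "bootstrap_files" {
  for_each = fileset(local.s3_bootstrap_filepath, "**")

  bucket = aws_s3_bucket.bootstrap.id
  key    = each.key
  source = "${local.s3_bootstrap_filepath}/${each.value}"
  # The MD5 changes whenever the file changes, which triggers a re-upload
  etag = filemd5("${local.s3_bootstrap_filepath}/${each.value}")
}
```

main.tf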
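One caveat: since April 2023, new S3 buckets have ACLs disabled by default (Object Ownership is set to "Bucket owner enforced"), and applying aws_s3_bucket_acl to such a bucket may fail with an AccessControlListNotSupported error. If you run into that, one approach, similar to the private-ACL example in the AWS provider documentation, is to add ownership controls and make the ACL depend on them. The resource name "ownership" is my own choice, and this would replace the aws_s3_bucket_acl resource in main.tf. Alternatively, since new buckets are private by default, you could simply drop the ACL resource.

```hcl
# Re-enables ACLs on the bucket so that aws_s3_bucket_acl can be applied
resource "aws_s3_bucket_ownership_controls" "ownership" {
  bucket = aws_s3_bucket.bootstrap.id

  rule {
    object_ownership = "BucketOwnerPreferred"
  }
}

resource "aws_s3_bucket_acl" "acl" {
  # Apply the ACL only after the ownership controls allow it
  depends_on = [aws_s3_bucket_ownership_controls.ownership]

  bucket = aws_s3_bucket.bootstrap.id
  acl    = "private"
}
```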

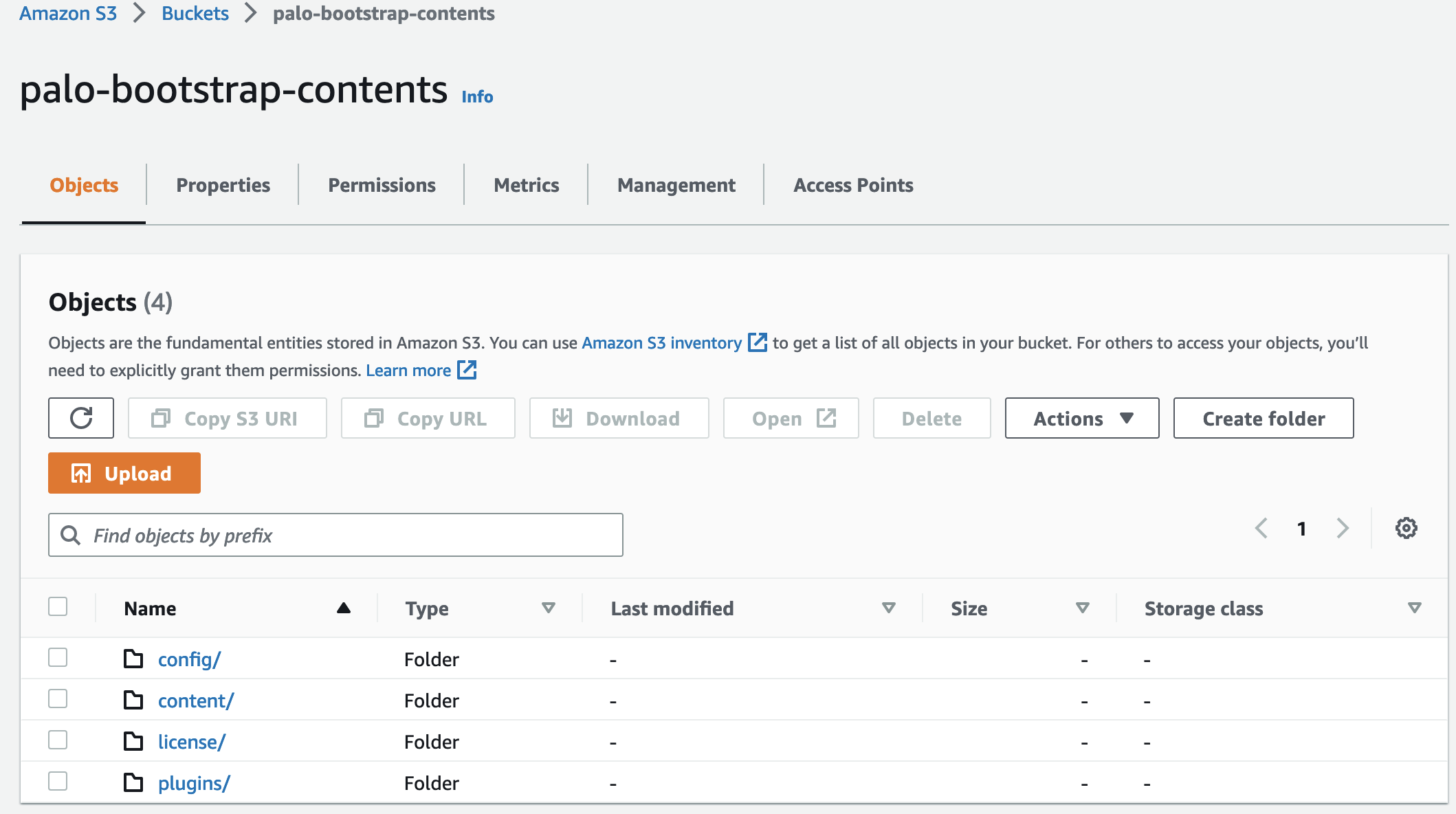

Once you run terraform apply, the objects will be created in the bucket.
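If you would rather have Terraform itself report what it uploaded, a small output block is one option. The output name below is hypothetical:

```hcl
output "bootstrap_object_keys" {
  description = "Object keys uploaded from the local bootstrap directory"
  # Collect the key of every aws_s3_object instance created by for_each
  value = sort([for obj in aws_s3_object.bootstrap_files : obj.key])
}
```

After terraform apply, running terraform output bootstrap_object_keys prints the sorted list of keys.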

You can also check the contents of the bucket using the s3-tree command:

```
suresh@mac:~/Documents/create_s3|⇒ s3-tree palo-bootstrap-contents
palo-bootstrap-contents
├── config
│   └── init-cfg.txt
├── content
│   ├── panup-all-antivirus-4391-4908
│   ├── panupv2-all-contents-8686-7925
│   └── panupv3-all-wildfire-751341-754782
├── license
│   └── authcodes
└── plugins
    └── vm_series-2.0.2

4 directories, 6 files
```

References

https://developer.hashicorp.com/terraform/language/functions/fileset